Preliminary Object Storage Benchmarks

Introduction

An object storage suitable for storing over 100 billion small immutable objects was designed in March 2021. A benchmark was written and tested to demonstrate that the design could deliver the expected performance.

Based on the results presented in this document, hardware procurement started in July 2021. The Software Heritage codebase is going to be modified to implement the proposed object storage, starting August 2021. The benchmarks will be refactored to use the actual codebase and run on a regular basis to verify that it performs as expected.

Benchmark results

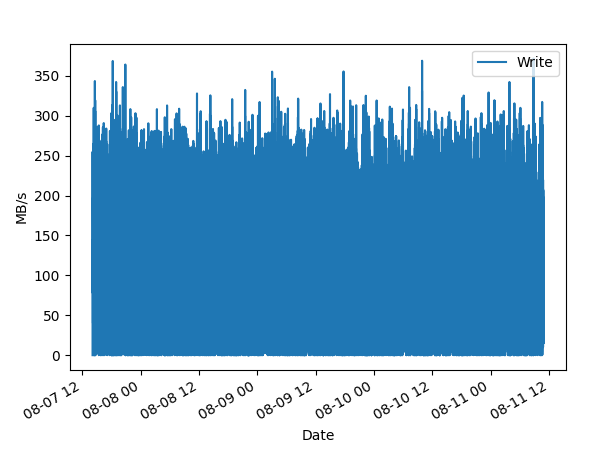

For the duration of the benchmark (5 days), over 5,000 objects per second (~100MB/s) were written to object storage.

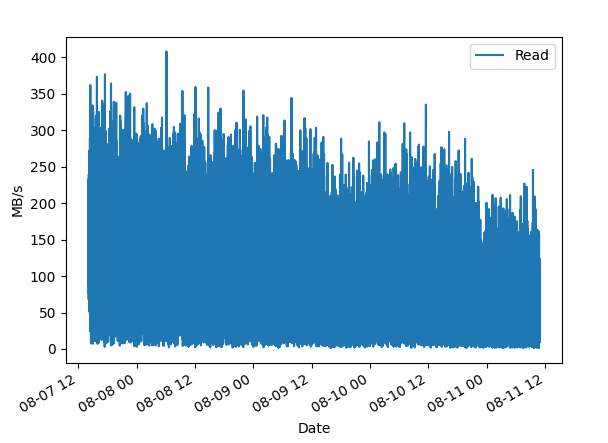

Simultaneously, over 23,000 objects per second (~100MB/s) were read, either sequentially or randomly.
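As a rough sanity check, the write and read rates above imply an average object size and a total volume for the run. The sketch below assumes 1MB = 10^6 bytes and treats the reported rates as constant over the five days; both are simplifications, not measurements.

```python
# Back-of-the-envelope figures derived from the rates reported above.
# Assumptions: 1 MB = 10**6 bytes, rates constant over the whole run.
SECONDS_PER_DAY = 24 * 60 * 60
RUN_SECONDS = 5 * SECONDS_PER_DAY

WRITE_OBJECTS_PER_S = 5_000
READ_OBJECTS_PER_S = 23_000
BYTES_PER_S = 100 * 10**6  # ~100MB/s for both reads and writes

avg_written_object = BYTES_PER_S / WRITE_OBJECTS_PER_S  # bytes per object
avg_read_object = BYTES_PER_S / READ_OBJECTS_PER_S
objects_written = WRITE_OBJECTS_PER_S * RUN_SECONDS
bytes_written = BYTES_PER_S * RUN_SECONDS

print(f"average written object: {avg_written_object / 1000:.1f} KB")
print(f"average read object:    {avg_read_object / 1000:.1f} KB")
print(f"objects written:        {objects_written / 10**9:.2f} billion")
print(f"volume written:         {bytes_written / 10**12:.1f} TB")
```

Since the reported rates are lower bounds ("over 5,000 objects per second"), the totals computed this way are lower bounds as well.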

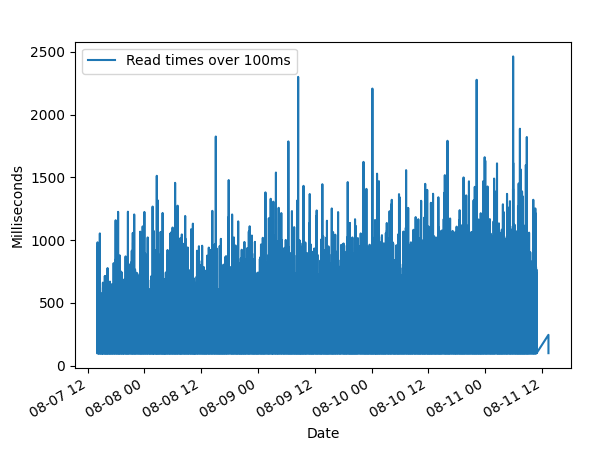

The time to randomly read a 4KB object is lower than 100ms 98% of the time. The graph below shows that the worst access time never exceeds 3 seconds over the entire duration of the benchmark.

On read performance degradation

The results show that read performance degrades over time. This was discovered during the last run of the benchmark (August 2021), which lasted five days. All previous runs (between April and July 2021) lasted less than three days, so this degradation was not detected.

A preliminary analysis suggests the root problem is not the Ceph cluster. The benchmark software does not implement throttling for reads and this could be the cause of the problem.

Hardware

The hardware provided by grid5000 consists of:

- A Dell S5296F-ON 10Gb/s switch

- 32 Dell PowerEdge C6420

  - System: 240 GB SSD SATA Samsung MZ7KM240HMHQ0D3

  - Storage: 4.0 TB HDD SATA Seagate ST4000NM0265-2DC

  - Intel Xeon Gold 6130 (Skylake, 2.10GHz, 2 CPUs/node, 16 cores/CPU)

  - 192 GiB RAM

  - One 10Gb/s link to the switch

- 2 Dell PowerEdge R940

  - System: 480 GB SSD SATA Intel SSDSC2KG480G7R

  - Storage: 2 x 1.6 TB SSD NVME Dell Express Flash NVMe PM1725 1.6TB AIC

  - Intel Xeon Gold 6130 (Skylake, 2.10GHz, 4 CPUs/node, 16 cores/CPU)

  - 768 GiB RAM

  - One 10Gb/s link to the switch

Note: The machines have additional resources (network, disk) but only those used in the context of the benchmark are listed.

Methodology

A single process drives the benchmark on a dedicated machine. It runs multiple write workers, each in a separate process (to avoid any lock contention), which report how many objects and bytes they wrote when terminating. It runs multiple read workers in parallel, also in separate processes, because they block on reads from RBD images. The read workers report how many objects they read and how many bytes in total. The objects per second, bytes per second and other relevant measures are stored in files every 5 seconds, to be analyzed when the run completes.
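The periodic measurements can be illustrated with a small sketch. It assumes the driver keeps cumulative object and byte counters and samples them every 5 seconds; the `Sample` type and the `rates` helper are hypothetical names chosen for illustration, not taken from the benchmark code.

```python
from dataclasses import dataclass

SAMPLE_INTERVAL = 5  # seconds between two samples, as in the benchmark


@dataclass
class Sample:
    """Cumulative counters observed at one sampling point (hypothetical type)."""
    objects: int
    nbytes: int


def rates(samples, interval=SAMPLE_INTERVAL):
    """Turn cumulative counters into (objects/s, bytes/s) for each interval."""
    result = []
    for previous, current in zip(samples, samples[1:]):
        result.append((
            (current.objects - previous.objects) / interval,
            (current.nbytes - previous.nbytes) / interval,
        ))
    return result


# Three samples taken 5 seconds apart (made-up numbers).
samples = [Sample(0, 0), Sample(25_000, 500_000_000), Sample(50_500, 1_000_000_000)]
print(rates(samples))
```

Storing the raw counters and deriving the rates afterwards keeps the sampling loop cheap and leaves the analysis for when the run completes.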

A write worker starts by writing objects in the Write Storage, using a payload obtained from a random source to avoid unintended gain due to easily compressible content. The Global Index is updated by adding a new entry for every object written in the Write Storage. The Global Index is pre-filled before the benchmark starts running to better reflect a realistic workload.
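A minimal sketch of such a payload source follows. It assumes an in-memory buffer filled once from `os.urandom` rather than the file used by the benchmark, and `PayloadSource` is a hypothetical name. Payloads are slices taken at random offsets, so they stay effectively incompressible without paying the cost of generating fresh random bytes for every object.

```python
import os
import random


class PayloadSource:
    """Serve incompressible payloads from a pre-generated random buffer."""

    def __init__(self, size):
        # One-time cost: fill the buffer with random bytes.
        self.buffer = os.urandom(size)

    def payload(self, length):
        """Return `length` bytes starting at a random offset in the buffer."""
        if length > len(self.buffer):
            raise ValueError("payload larger than the random buffer")
        offset = random.randrange(len(self.buffer) - length + 1)
        return self.buffer[offset:offset + length]


source = PayloadSource(1024 * 1024)  # 1MB buffer, small for illustration
data = source.payload(4096)
print(len(data))
```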

When the Shard is full in the Write Storage, a second phase starts and the Shard is moved to the Read Storage. The content of the Shard is read from the database twice: once to build the index and once to obtain the content. The index and the content of the Shard are written to the RBD image in chunks of 4MB. Once this is finished, the Shard is deleted from the Write Storage.

A read worker opens a single Shard and reads objects either:

- at random, or

- sequentially from a random point in the Shard

In both cases it stops after reading a few megabytes, to keep the processes short-lived and rotate over as many Shards as possible, so that the content of the Shard is unlikely to be found in the cache.

The time it takes to read an object at random is measured and stored in a file when it exceeds 100ms. It is interpreted as the time to first byte, although it really is the time to get the entire object. However, since the objects are small, the difference is considered marginal.
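The measurement can be sketched as follows. This is an illustration under assumptions: slow reads are kept in a list rather than written to a file, and `timed_read` and `fraction_fast` are hypothetical helper names.

```python
import time

SLOW_READ_THRESHOLD = 0.1  # seconds (100ms), as in the benchmark


def timed_read(read, slow_reads):
    """Call `read()` and record its duration in `slow_reads` if it is too slow."""
    start = time.perf_counter()
    data = read()
    elapsed = time.perf_counter() - start
    if elapsed > SLOW_READ_THRESHOLD:
        slow_reads.append(elapsed)
    return data


def fraction_fast(total_reads, slow_reads):
    """Fraction of reads that completed within the threshold."""
    return (total_reads - len(slow_reads)) / total_reads


slow_reads = []
timed_read(lambda: b"x" * 4096, slow_reads)               # fast in-memory read
timed_read(lambda: time.sleep(0.15) or b"x", slow_reads)  # simulated slow read
print(fraction_fast(2, slow_reads))
```

Recording only the reads that exceed the threshold keeps the output small while still allowing the share of fast reads to be computed from the total read count.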

The writers compete with the readers: without any reader, the writers perform better (objects per second and bytes per second). The number of read workers is set by trial and error to deliver the desired performance (more workers means better performance) without hindering the writers.

Assumptions

- The creation of the perfect hash table for a given Shard is not benchmarked and is assumed to be negligible. It is, however, expensive when implemented in Python and must be implemented in C or a similar compiled language.

- The majority of reads are sequential (for mirroring and analysis) and a minority are random (from the API)

- The large objects (i.e. > 80KB) are not taken into consideration. The small objects are the only ones causing problems, and having larger objects would improve the benchmarks.

- There are no huge objects because their size is capped at 100MB.

- The random source is a file created beforehand from /dev/urandom, because reading /dev/urandom directly is too slow (~200MB/s)

- Reads only target the Read Storage, although they may also target the Write Storage in a production environment. As the cluster grows, the number of objects in the Read Storage will grow while the number of objects in the Write Storage will remain constant. As a consequence, the share of reads served by the Write Storage will become smaller over time.

Services

- Operating System: Debian GNU/Linux buster

- Read Storage

  - 4+2 erasure coded pool

  - Ceph Octopus https://docs.ceph.com/en/octopus/rados/operations/erasure-code/

- Write Storage

  - A PostgreSQL master server

  - A PostgreSQL (cold) standby server replicating the master server

  - PostgreSQL 11 https://www.postgresql.org/docs/11/
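The storage cost of the 4+2 erasure coded pool listed above can be worked out with a short calculation. With k data chunks and m coding chunks, each byte of user data occupies (k + m) / k bytes of raw storage, and up to m chunks can be lost without losing data. The helper name below is hypothetical.

```python
def erasure_coding_overhead(k, m):
    """Raw bytes stored per byte of user data with k data and m coding chunks."""
    return (k + m) / k


K, M = 4, 2  # the 4+2 pool listed above
overhead = erasure_coding_overhead(K, M)
print(f"raw/usable ratio:       {overhead}")
print(f"usable fraction:        {1 / overhead:.3f}")
print(f"chunk losses tolerated: {M}")
```

For comparison, three-way replication tolerates the same number of losses but stores 3 bytes of raw data per byte of user data.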

Writing

There are --rw-workers processes, each in charge of creating a single Shard. When a worker completes, another one is created immediately. Writing stops after --file-count-ro Shards have been created.

The size of each Shard is at least --file-size MB and the exact number of objects it contains depends on the random distribution of the object size.
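The relation between the Shard size and the number of objects can be sketched as a fill loop. The size distribution in `sample_object_size` is invented for illustration only; the real one is described in the object storage design and is not reproduced here. `fill_shard` is also a hypothetical name.

```python
import random


def sample_object_size(rng):
    """Made-up size distribution: mostly small objects, a few larger ones."""
    if rng.random() < 0.75:
        return rng.randint(1, 4 * 1024)          # small objects, up to 4KB
    return rng.randint(4 * 1024 + 1, 80 * 1024)  # larger ones, up to 80KB


def fill_shard(file_size_mb, rng):
    """Draw object sizes until the Shard holds at least file_size_mb MB."""
    target = file_size_mb * 1024 * 1024
    sizes = []
    total = 0
    while total < target:
        size = sample_object_size(rng)
        sizes.append(size)
        total += size
    return sizes


rng = random.Random(0)  # fixed seed so runs are repeatable
sizes = fill_shard(1, rng)
print(len(sizes), sum(sizes))
```

Because the loop stops only once the target is reached, the Shard is always at least the requested size and overshoots it by less than one object.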

- Creating the Shard in Write Storage

  - Create a database named after a random UUID

  - Add objects to the database, up to --file-size MB

  - The size distribution of the objects in the database mimics the distribution described in the object storage design

  - The content of the objects is random

- When a Shard is full in the Write Storage

  - Create an RBD image named after the UUID and mount it

  - Iterate over the content of the Shard

  - Write an index at the beginning of the RBD image

  - Write the content of each object after the index

  - Remount the RBD image read-only
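The layout produced by the steps above (an index first, then the content of each object) can be sketched as follows. This is an illustration under assumptions: the index is a flat list of fixed-size entries rather than a perfect hash table, the `ENTRY` format is hypothetical, everything is built in memory in one pass, and an `io.BytesIO` stands in for the mounted RBD image.

```python
import io
import struct

CHUNK_SIZE = 4 * 1024 * 1024     # write in chunks of 4MB
ENTRY = struct.Struct("<32sQQ")  # hypothetical index entry: key, offset, size


def pack_shard(objects):
    """Serialize {key: content} as an index followed by the object contents."""
    offset = ENTRY.size * len(objects)  # contents start right after the index
    index = bytearray()
    body = bytearray()
    for key, content in objects.items():
        index += ENTRY.pack(key, offset, len(content))
        body += content
        offset += len(content)
    return bytes(index + body)


def write_in_chunks(image, data, chunk_size=CHUNK_SIZE):
    """Write `data` to the file-like `image`, at most chunk_size bytes at a time."""
    writes = 0
    for start in range(0, len(data), chunk_size):
        image.write(data[start:start + chunk_size])
        writes += 1
    return writes


objects = {b"a" * 32: b"hello", b"b" * 32: b"world!"}
image = io.BytesIO()  # stands in for the mounted RBD image
write_in_chunks(image, pack_shard(objects))

# Read the second object back through its index entry.
raw = image.getvalue()
key, offset, size = ENTRY.unpack_from(raw, ENTRY.size)
print(key == b"b" * 32, raw[offset:offset + size])
```

Placing the index at a fixed position at the start of the image means a reader can locate any object with one index lookup followed by one read at the recorded offset.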

Reading

There are --ro-workers processes. Each process picks a Shard from the Read Storage at random and a workload (sequential or random).

- Random workload

  - Seek to a random position in the Shard

  - Read an object

  - The size distribution of the objects in the database mimics the distribution described in the object storage design

- Sequential workload

  - Seek to a random position in the Shard

  - Read 4MB of objects sequentially

  - Assume objects have a size of 4KB (the median object size)
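The two workloads above can be sketched against an in-memory stand-in for a Shard. The sketch assumes fixed 4KB objects for both workloads and aligns random positions on object boundaries; both are simplifications, and the function names are hypothetical.

```python
import random

OBJECT_SIZE = 4 * 1024             # assumed object size (the median, 4KB)
SEQUENTIAL_READ = 4 * 1024 * 1024  # bytes read per sequential run (4MB)


def random_read(shard, rng):
    """Seek to a random object-aligned position and read one object."""
    count = len(shard) // OBJECT_SIZE
    position = rng.randrange(count) * OBJECT_SIZE
    return shard[position:position + OBJECT_SIZE]


def sequential_read(shard, rng):
    """Seek to a random position, then read up to 4MB object by object."""
    count = len(shard) // OBJECT_SIZE
    position = rng.randrange(count) * OBJECT_SIZE
    end = min(position + SEQUENTIAL_READ, count * OBJECT_SIZE)
    return [shard[p:p + OBJECT_SIZE] for p in range(position, end, OBJECT_SIZE)]


rng = random.Random(0)
shard = bytes(8 * 1024 * 1024)  # 8MB of zeros standing in for a Shard
print(len(random_read(shard, rng)), len(sequential_read(shard, rng)))
```

A sequential run that starts near the end of the Shard returns fewer objects than a full 4MB, since it stops at the end of the Shard.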