A practical approach to efficiently store 100 billions small objects in Ceph

The Software Heritage project mission is to collect, preserve and share all software that is available in source code form, with the goal of building a common, shared infrastructure at the service of industry, research, culture and society as a whole. As of February 2021 it contains 10 billions unique source code files (or “objects”, in the following) totaling ~750TB of (uncompressed) data and grows by 50TB every month. 75% of these objects have a size smaller than 16KB and 50% have a size smaller than 4KB. But these small objects only account for ~5% of the 750TB: 25% of the objects have a size > 16KB and occupy ~700TB.

The desired performances for 10PB and 100 billions objects are as follows:

- The clients aggregated together can write at least 3,000 objects/s and at least 100MB/s.

- The clients aggregated together can read at least 3,000 objects/s and at least 100MB/s.

- There is no space amplification for small objects.

- Getting the first byte of any object never takes longer than 100ms.

- Objects can be enumerated in bulk, at least one million at a time.

- Mirroring the content of the Software Heritage archive can be done in bulk, at least one million objects at a time.

Using an off-the-shelf object storage such as the Ceph Object Gateway or MinIO does not meet the requirements:

- There is a significant space amplification for small objects: at least 25%, depending on the object storage (see “How does packing Objects save space?” below for details)

- Mirroring the content of the archive can only be done one object at a time and not in bulk which takes at least 10 times longer (see “How does packing Objects help with enumeration?” for details)

A new solution must be implemented by re-using existing components and made available for system administrators to conveniently deploy and maintain in production. There are three ways to do that:

- Contribute packaging and stable releases to a codebase such as Ambry.

- Modify an object storage such as MinIO to support object packing.

- Get inspiration from an object storage design.

For reasons explained below (see “Storage solutions and TCO”), it was decided to design a new object storage and implement it from scratch.

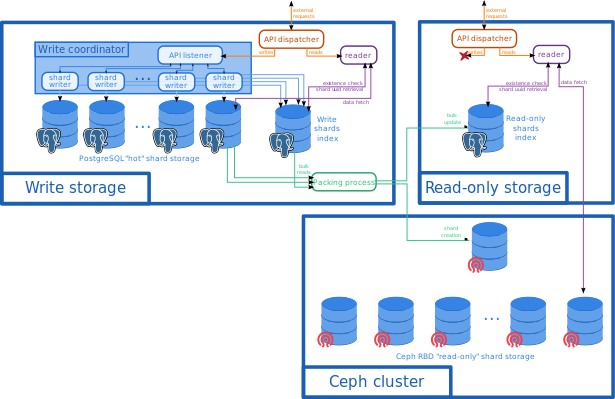

Proposed object storage design

In a nutshell, objects are written to databases running on a fixed number of machines (the Write Storage) that can vary to control the write throughput. When a threshold is reached (e.g. 100GB) all objects are put together in container (a Shard), and moved to a readonly storage that keeps expanding over time. After a successful write, a unique identifier (the Object ID) is returned to the client. It can be used to read the object back from the readonly storage. Reads scale out because the unique identifiers of the objects embed the name of the container (the Shard UUID). Writes also scales out because the Database is chosen randomly. This is the Layer 0.

Clients that cannot keep track of the name of the container can rely on an API that relies on an index mapping all known objects signatures (the Object HASH below) to the name of the container where they can be found. Although this index prevents scaling out writes, the readonly storage can still scale out by multiplying copies of the index as needed. This is the Layer 1.

Layer 0 scales out

+--- write op ----+ +--- read op ----+

v ^ v ^

Object & | | |

Object HASH Object ID Object ID Object

| Object HASH | |

v Shard UUID v ^

| | | |

v ^ v ^

+---- Write Storage --------+ +---- Read Storage --------+

| | | |

| +----------+ | | +-------+ +-------+ |

| | Database |->--Packing->----> | Shard | | Shard | |

| +----------+ | | +-------+ +-------+ |

| +----------++----------+ | | +-------+ +-------+ |

| | Database || Database | | | | Shard | | Shard | |

| +----------++----------+ | | +-------+ +-------+ |

| | | +-------+ +-------+ |

+---------------------------+ | | Shard | | Shard | |

| +-------+ +-------+ |

| ... |

+--------------------------+

Layer 1 reads scale out

+---- Write Storage --------+ +---- Read Storage ---------+

| | | |

|+-------------------------+| |+-------------------------+|

||Object HASH to Shard UUID|| ||Object HASH to Shard UUID||

|| index |>>>>| index ||

|+-------------------------+| |+-------------------------+|

+---------------------------+ |+-------------------------+|

| | ||Object HASH to Shard UUID||

^ v || index ||

| | |+-------------------------+|

^ v | ... |

Object | +---------------------------+

Object HASH v | |

| | ^ v

^ v | |

| | Object HASH Object

^ v | |

| | ^ v

+--- write op ----+ +--- read op ----+

Glossary

- Object: an opaque sequence of bytes.

- Object HASH: the hash of an Object, e.g., the checksum part of a SWHID.

- Shard: a group of Objects, used to partition the full set of objects into manageable subsets.

- Shard UUID: the unique identifier of a Shard, as a UUID.

- Object ID: a pair made of the Object HASH and the Shard UUID containing the object.

- Global Index: a table mapping the Object HASH to the Shard UUID that contains the Object.

- Read Storage: the unlimited size storage from which clients can only read Objects. It only contains Objects up to a given point in time.

- Write Storage: the fixed size storage from which clients can read or write. If an Object is not found in the Write storage, it must be retrieved from the Read Storage.

- Object Storage: the content of the Write Storage and the Read Storage combined.

- Database: PostgreSQL, Cassandra, etc.

- Ceph: a self-healing distributed storage.

- RBD image: a Ceph block storage that can either be used via the librbd library or as a block device from /dev/rbd.

- TCO: Total Cost of Ownership

The key concepts are:

- Packing millions of Objects together in Shards to:

- save space and,

- efficiently perform bulk actions such as mirroring or enumerations.

- Two different storage:

- Read Storage that takes advantage of the fact that Objects are immutable and never deleted and,

- Write Storage from which Shards are created and moved to the Read Storage.

- Identifying an object by its Object HASH and the Shard UUID that contains it so that its location can be determined from the Object ID.

While the architecture based on these concepts scales out for writing and reading, it cannot be used to address Objects with their Object HASH alone which is inconvenient for a number of use cases. An index mapping the Object HASH to the Shard UUID must be added to provide this feature, but it does not scale out writes.

The content of the Object Storage (i.e., the Write Storage and the Read Storage combined) is strongly/strictly consistent. As soon as an Object is written (i.e., the write operation returns to the client), a reader can get the Object content from the Object Storage (with the caveat that it may require looking up the object from both the Write Storage and Read Storage).

The Read Storage is eventually consistent. It does not contain the latest Objects inserted in the Write Storage but it will, eventually. It contains all objects inserted in the Object Storage, up to a given point in time.

Layer 0 (Object lookup require a complete Object ID)

Architecture

- Write Storage:

- A fixed number of Databases

- Read Storage:

- Shards implemented as Ceph RBD images named after their Shard UUID

- The content of the Shard uses a format that allows retrieving an Object in O(1) given the Object HASH

Writing

The Object is stored in one of the Databases from the Write Storage. The Database is chosen at random. A database is associated with a unique Shard UUID, chosen at random. All Objects written to a Database will be stored in the same Shard.

A successful Object write returns the Object ID. Writing the same object twice may return different Object IDs. The Object HASH will be the same because it is based on the content of the Object. But the Shard in which the Object is stored may be different since it is chosen at random.

Packing

When a Database grows bigger than a threshold (for instance 100GB), it stops accepting writes. A Shard is created in the Read Storage and Objects in the Database are sorted and copied to it. When the Shard is complete, the Database is deleted. Another Database is created, a new Shard UUID is allocated and it starts accepting writes.

Reading

The Shard UUID is extracted from the Object ID. If a Shard exists in the Read Storage, the Object HASH is used to lookup the content of the Object. Otherwise the Database that owns the Shard UUID is looked up in the Write Storage and the Object HASH is used to lookup the content of the Object. If the reader is not interested in the most up to date content, it can limit its search to the Read Storage.

Layer 1 (Objects can be looked up using the Object HASH alone)

A Global Index mapping the Object HASH of all known Objects to the Shard UUID is used to:

- allow clients to fetch Objects using their Object HASH only instead of their Object ID.

- deduplicate identical Objects based on their Object HASH

Architecture

- Write Storage:

- Read/write Global Index of all known Objects in the Write Storage and the Read Storage

- Read Storage:

- Read/write Global Index of all known Objects in the Read Storage

- Multiple readonly replicas of the Global Index of all known Objects in the Read Storage

Writing

If the Object HASH exists in the Read Storage Global Index, do nothing. Otherwise perform the write and add the Object ID to the Write Storage Global Index. There may be duplicate Objects in the Write Storage. It is expected that they race to be inserted in the Write Storage Global Index.

Packing

During packing, each Object HASH is looked up in the Read Storage Global Index. If it exists, the object is discarded. Otherwise its Object ID is added to the Read Storage Global Index. When packing is complete:

- Readonly replicas of the Read Storage Global Index are updated with the newly added Object IDs.

- Object HASH that were found to be duplicate are updated in the Write Storage Global Index. The Object HASH is mapped to the Shard UUID retrieved from the Read Storage Global Index.

Reading

If the Object HASH is found in the Read Storage Global Index, use the Shard UUID to read the Object content from the Shard found in the Read Storage. Otherwise lookup the Object HASH from the Write Storage Global Index and read the content of the Object from the Database that owns the Shard UUID.

How does packing Objects save space?

The short answer is: it does not when Objects are big enough, but it does when there are a lot of small Objects.

If there are billions of objects (i.e., less than one billion is not a lot) and 50% of them have a size smaller than 4KB and 75% of them have a size smaller than 16KB (i.e., bigger than 16KB is not small), then packing will save space.

In the simplest method of packing (i.e., appending each Object after another in a file) and since the Object HASH has a fixed size, the only overhead for each object is the size of the Object (8 bytes). Assuming the Shard containing the Objects is handled as a single 100GB Ceph RBD Image, it adds R bytes. If the underlying Ceph pool is erasure coded k=4,m=2 an additional 50% must be added.

Retrieving an Object from a Shard would be O(n) in this case because there is no index. It is more efficient to add a minimal hash table to the Shard so that finding an object is O(1) instead. That optimization requires an additional 8 bytes per Object to store their offset, i.e. a total of 16 bytes per object.

If Objects are not packed together, each of them requires at least B bytes, which is the minimum space overhead imposed by the underlying storage system. And an additional 50% for durability. The space used by Objects that are smaller than a given threshold will be amplified, depending on the underlying storage. For instance all objects in Ceph have a minimum size of 4KB, therefore the size of a 1KB Object will be amplified to 4KB which translates to a 35% space amplification. Another example is MinIO with over 200% space amplification or Swift for which packing small files was recently proposed.

To summarize, the overhead of storing M Objects totaling S bytes with M=100 billions and S=10PB is:

- packed: ~15.5PB

- (S / 100GB) * R == (10PB / 100GB) * R bytes = 10,000 * R bytes

- (M * 24) = 100G Objects * 24 bytes = 2.4TB

- 50% for durability = 10PB * 0.5 = 5PB

- not packed: ~17.5PB based on the optimistic assumption that the storage system has a 25% space overhead for small files

- 25% for space amplification = 10PB * 0.25 = 2.5PB

- 50% for durability = 10PB * 0.5 = 5PB

How does packing Objects help with enumeration?

For mirroring or running an algorithm on all objects, they must be enumerated. If they are not packed together in any way, which is the case with MinIO or Swift, they must be looked up individually. When they are packed together (one million or more), the reader can download an entire Shard instead, saving the accumulated delay imposed by millions of individual lookup.

If looking up an individual Object takes 10 milliseconds and Shards can be read at 100MB/s:

- Getting 1 billion objects requires 10 millions seconds which is over 100 days.

- One billion objects is 1/10 of the current content of Software Heritage, i.e. ~75TB which can be transferred by reading the Shards in less than 10 days

Storage solutions and TCO

When looking for off-the-shelf solutions all options were considered, including distributed file systems such as IPFs and more and most of them were discarded because they had at least one blocker that could not be fixed (e.g. no feature to guarantee the durability of an object). In the end a few remained, either including the following features or with the possibility for a third party to contribute them back to the project:

- Scale to 100 billions objects

- Provide object packing

- Provide detailed documentation and community support for system administrators operating the storage

- Be thoroughly tested before a stable release is published

- Be packaged for at least one well known distribution

- Have stable releases maintained for at least two years

- A sound approach to address security problems (CVE etc.)

| Name | RGW | SeaweedFS | MinIO | Swift | Ambry |

|---|---|---|---|---|---|

| Scaling | Yes | Yes | Yes | Yes | Yes |

| Packing | No | Yes | No | No | Yes |

| Documentation | Good | Terse | Good | Good | Terse |

| Tests | Good | Few | Average | Good | Few |

| Packages | Yes | No | No | Yes | No |

| Stable releases | Yes | No | Yes | Yes | No |

| Security | Yes | No | Yes | Yes | No |

Does not have stable releases and testing

The performance goals, size distribution and the number of objects in Software Heritage are similar to what is described in the 2010 article “Finding a needle in Haystack: Facebook’s photo storage” that motivated the implementation of SeaweedFS in 2013 or Ambry, the object storage published in 2017 by LinkedIn to store and serve trillions of media objects in web companies.

Contributing to SeaweedFS or Ambry so they can be deployed and maintained would require:

- Creating packages for the target Operating System (e.g. Debian GNU/Linux), maintaining a repository to distribute them, upload them to the official distribution repository so that they are available in the next stable release (about two years from now)

- Creating Ansible roles or Puppet modules for deployment on multiple machines

- Improving the documentation with a configuration and architecture guide to deploy at scale

- Discuss with upstream to create stable releases, define their lifecycle and organize release management

- Establish a security team in charge of handling the CVE

- Setup and infrastructure and create the software for integration testing to be run before a stable release is published to reduce the risk of regressions or data loss. This is specially important because a significant part of the software is dedicated to data storage and replication: bugs can lead to data loss or corruption.

Does not provide object packing

MinIO and Swift suffer from a space amplification problem and they do not provide object packing. Although Ceph Object Gateway (also known as RGW) stores objects in RocksDB instead of files, it also suffers from a space amplification problem and does not provide object packing.

Contributing to RGW, MinIO or Swift to add object packing would require:

- Creating a blueprint to modify the internals to add object packing

- Discuss with upstream to validate the blueprint

- Implement the blueprint and the associated tests

Estimating the TCO

Since no solution can be used as is, some work must be done in each case and the effort it requires should be compared. It is however difficult because the nature of the effort is different. The following factors were considered and aggregated in a TCO estimate.

- Data loss risk: if a bug in the work done implies the risk of losing data, it makes the work significantly more complicated. It is the case if packing must be implemented in the internals of an existing object storage such as Swift. It is also the case if an object storage does not have integration testing to verify upgrading to a newer version won’t lead to a regression, which is the case with Ambry. It is likely that the Ambry upstream has extensive integration testing but they are not published.

- Large codebase: a large codebase means modifying it (to implement packing) or distributing it (packaging and documentation) is more difficult

- Language: if the language and its environment is familiar to the developers and the system administrators, the work is less difficult

- Skills: if the work requires highly specialized skills (such as an intimate understanding of how a distributed storage system guarantees a strict consistency of the data, or running integration tests that require a cluster of machines) it is more difficult

| RGW | SeaweedFS | MinIO | Swift | Ambry | |

|---|---|---|---|---|---|

| Data loss risk | Yes | Yes | Yes | Yes | Yes |

| Large codebase | Yes | Yes | Yes | Yes | Yes |

| Language | C++ | Go | Go | Python | Java |

| Skills | Medium | High | High | High | High |

| TCO estimate | High | High | High | High | High |

In a nutshell, implementing a system from scratch has the lowest TCO estimate, primarily because it is independent of the underlying distributed storage.